Qwen 1.5 MoE

Highly efficient mixture-of-experts (MoE) model from Alibaba

What is Qwen 1.5 MoE?

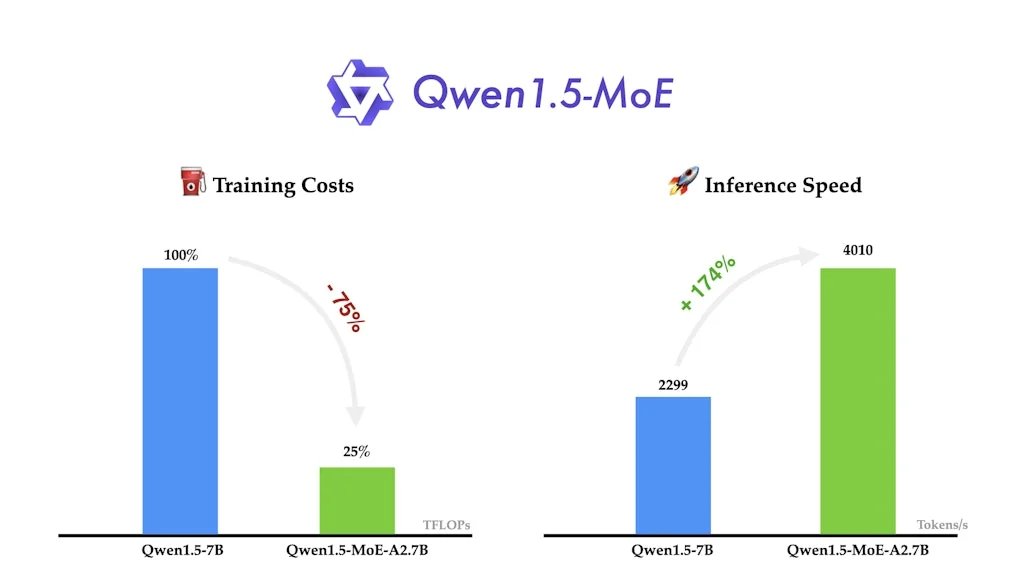

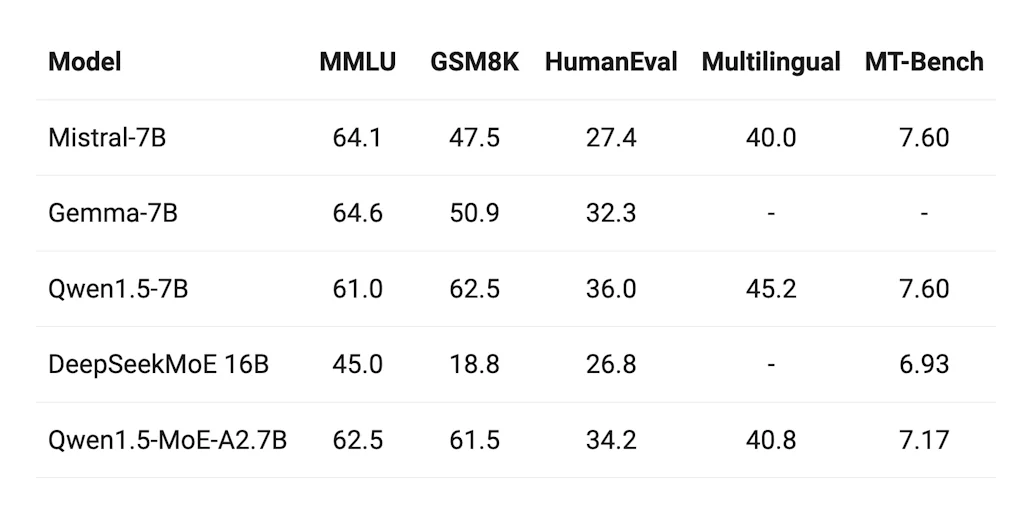

Qwen1.5-MoE-A2.7B is a small mixture-of-experts (MoE) model with only 2.7 billion activated parameters, yet it matches the performance of state-of-the-art 7B models such as Mistral 7B and Qwen1.5-7B.
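The phrase "activated parameters" comes from sparse expert routing: a gate picks a few experts per token, so only the shared layers plus those experts do any work. The sketch below is a generic, toy top-k router written for illustration. It is not Qwen's implementation, and the expert count, `k`, gate vectors, and parameter sizes are all hypothetical.

```python
import math


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Hypothetical toy "expert" that just scales its input.
    # Real experts are feed-forward networks.
    return lambda v: [scale * x for x in v]


def moe_forward(token, experts, gate_vectors, k=2):
    """Route one token to its top-k experts and mix their outputs.

    Returns the mixed output and the indices of the experts that actually ran.
    """
    # Gate logit per expert: dot product of the token with that expert's gate vector.
    logits = [sum(g * x for g, x in zip(gv, token)) for gv in gate_vectors]
    probs = softmax(logits)
    # Keep only the k most probable experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(token)
    for i in chosen:
        y = experts[i](token)
        w = probs[i] / norm  # renormalize the weights over the chosen experts
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


def activated_params(shared, per_expert, k):
    # Parameters used per token: shared layers plus k experts' worth.
    return shared + k * per_expert


def total_params(shared, per_expert, num_experts):
    # Parameters stored in the model: shared layers plus every expert.
    return shared + num_experts * per_expert


if __name__ == "__main__":
    experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
    out, chosen = moe_forward([1.0, 0.0], experts, gates, k=2)
    print(chosen, out)  # only 2 of the 4 experts ran for this token
    # Hypothetical sizes (millions of parameters), not Qwen's real figures.
    print(activated_params(100, 50, 2), "of", total_params(100, 50, 8))
```

With these made-up sizes, each token touches 200 of the 500 stored parameters, which is the sense in which an MoE model can be "small" at inference time while storing more weights overall. Renormalizing over the chosen experts is one common choice; some implementations skip it.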

Tool Details

| Categories | LLMs, AI Infrastructure Tools |
|---|---|
| Website | huggingface.co |
| Became Popular | April 3, 2024 |
| Platforms | Web |

Recent Reviews (1)

★★★★★

Highly performant base models that can be used for task-specific training, such as the function-calling experience built into Arch.

Frequently Asked Questions about Qwen 1.5 MoE

When did Qwen 1.5 MoE become popular?

Qwen 1.5 MoE became popular around April 3, 2024.

What is Qwen 1.5 MoE's overall user rating?

Qwen 1.5 MoE has an overall rating of 5.0/5 based on 2 user reviews.

What type of tool is Qwen 1.5 MoE?

Qwen 1.5 MoE belongs to the following categories: LLMs, AI Infrastructure Tools.
